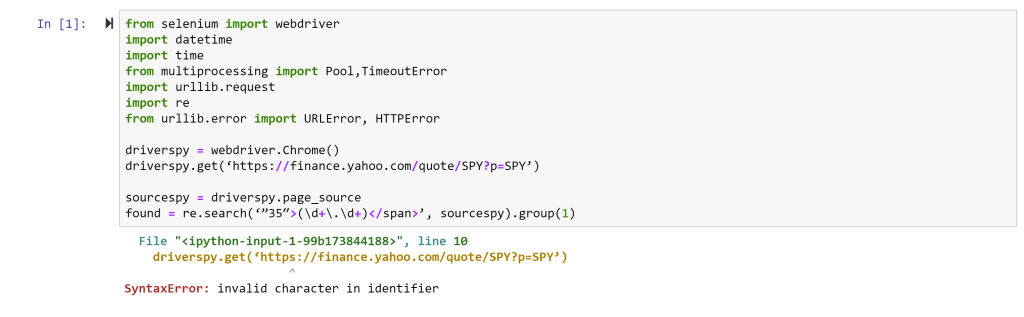

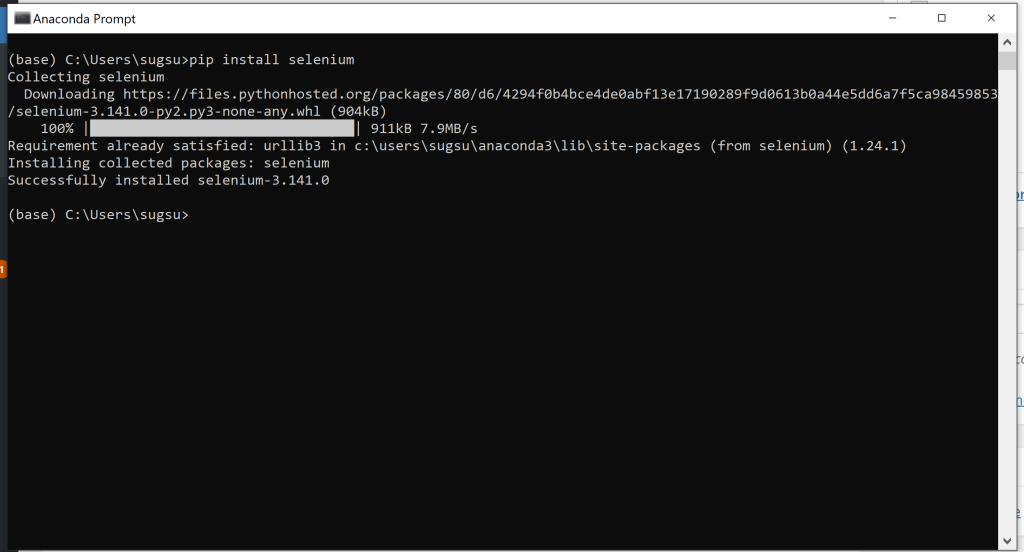

Why is it worth learning a programming language in Finance, and how would one motivate students to make the push to do it? First, my firm belief is that high-level coding (in a language like Python or VBA) is going to be as required for financial professionals in 5-10 years as Excel knowledge is today. Second, many investment banking analyst programs (and other analyst programs) already have a Python module – scraping the latest financials (or some other reporting) from EDGAR need not be as painful as it used to be 20-30 years ago. A few lines of code, executed quarterly, could save an analyst hours, if not days, of time. Finally, even if one does not plan to become a coder, it is worth knowing what coding can do for you – for example, when students move up to middle management and have analysts of their own, they can potentially direct them to labor-saving techniques using widely available programming languages.

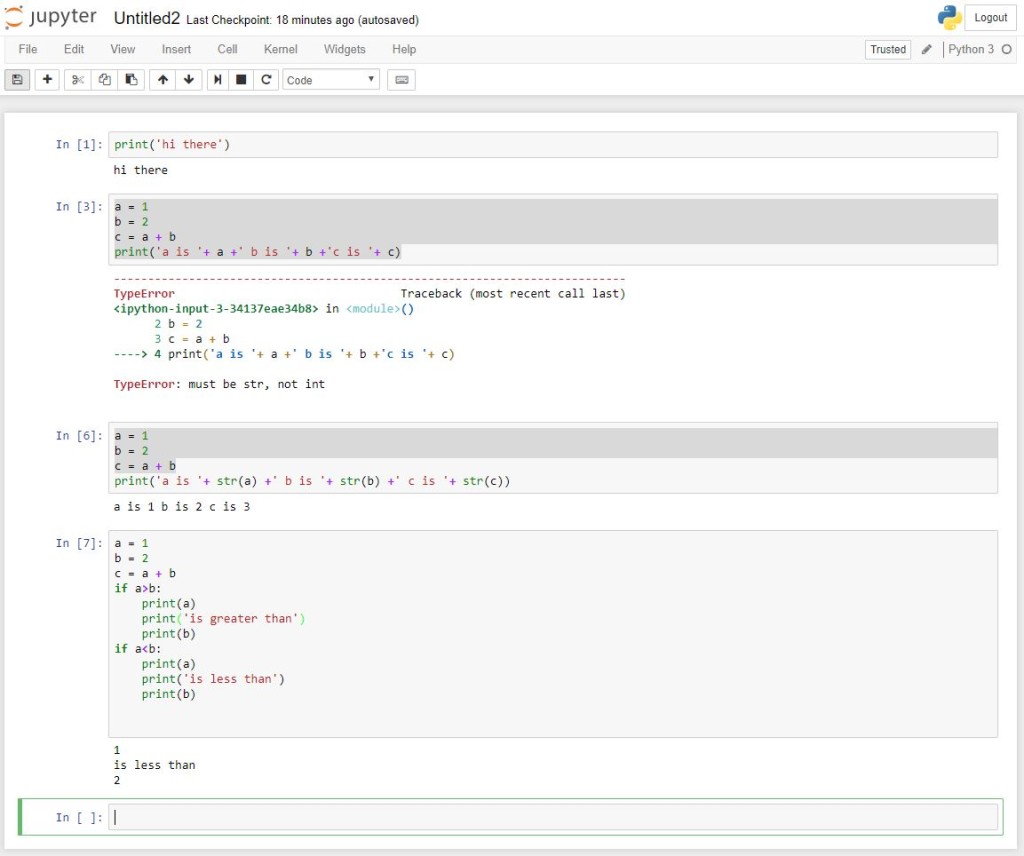

So, if we start from the position that high-level coding is useful in finance, what would be the best way to motivate a smart finance student to learn some coding? (Note that I distinguish statistical packages like STATA or SAS from programming languages. Although programming can be done in STATA or SAS, I’m thinking of traditional programming with loops and conditional statements.)

One exercise I’ve found to work well in my class is generating an efficient frontier. Given a set of assets and historical returns, it is easy to use Excel to do mean-variance optimization to generate individual points on the mean-variance frontier. Using Excel’s “solver” tool to generate weights that maximize expected returns for a given level of risk, or minimize risk for a given expected return, is quite easy and intuitive for most of my students. However, generating the entire curve is a bit beyond Excel’s usual bag of tricks. It would require running a bunch of solver optimizations for different levels of risk, and then plotting all the points. In class, I divide my students into N groups, with each group doing the solver optimization for a given level of risk.

This sets up nicely to introduce a piece of Python coding which loops through a set of standard deviations and optimizes asset weights to generate max expected returns for each standard deviation – essentially what the different groups were doing in class! Students seem to understand this advantage of coding and it serves to tee up using Python for more complex analysis later in the class.
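To make the idea concrete, here is a minimal sketch of what such a loop could look like. It is not the exact code used in class: the three assets, their expected returns, and the covariance matrix are made-up numbers, the function names are hypothetical, and a simple grid search over long-only weights stands in for a proper solver so that the example needs nothing beyond the Python standard library.

```python
from itertools import product
from math import sqrt

# Hypothetical annual expected returns and covariance matrix for three assets.
# These numbers are made up for illustration; they are not real market data.
EXPECTED_RETURNS = [0.06, 0.09, 0.12]
COVARIANCE = [
    [0.0100, 0.0020, 0.0010],
    [0.0020, 0.0225, 0.0030],
    [0.0010, 0.0030, 0.0400],
]


def portfolio_return(weights):
    """Expected return of a portfolio: weighted average of asset returns."""
    return sum(w * r for w, r in zip(weights, EXPECTED_RETURNS))


def portfolio_std(weights):
    """Portfolio standard deviation: sqrt of w' * Covariance * w."""
    n = len(weights)
    variance = sum(
        weights[i] * weights[j] * COVARIANCE[i][j]
        for i in range(n)
        for j in range(n)
    )
    return sqrt(variance)


def candidate_weights(steps=50):
    """Yield long-only weight triples on a grid, each summing to 1."""
    for i, j in product(range(steps + 1), repeat=2):
        if i + j <= steps:
            yield (i / steps, j / steps, (steps - i - j) / steps)


def efficient_frontier(target_stds, steps=50):
    """For each risk level, find the grid portfolio with the highest return.

    Assumption: a brute-force grid search replaces the solver step. It is
    slow for many assets but easy to read for three.
    """
    # Evaluate every candidate portfolio once, then reuse across risk levels.
    candidates = [
        (portfolio_std(w), portfolio_return(w), w)
        for w in candidate_weights(steps)
    ]
    frontier = []
    for target in target_stds:  # this loop is what the N groups did by hand
        feasible = [c for c in candidates if c[0] <= target]
        if not feasible:
            continue  # no portfolio on the grid is this safe
        _, best_return, best_weights = max(feasible, key=lambda c: c[1])
        frontier.append((target, best_return, best_weights))
    return frontier


if __name__ == "__main__":
    for target, exp_ret, weights in efficient_frontier([0.09, 0.10, 0.12, 0.15, 0.25]):
        print(f"max std {target:.2f}: expected return {exp_ret:.4f}, weights {weights}")
```

Each pass through the `for target in target_stds` loop plays the role of one student group running the solver for one level of risk. Plotting the resulting (standard deviation, expected return) pairs traces out the frontier. With more assets, one would likely swap the grid search for a numerical optimizer such as `scipy.optimize.minimize`.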